AI Audio Analyzer

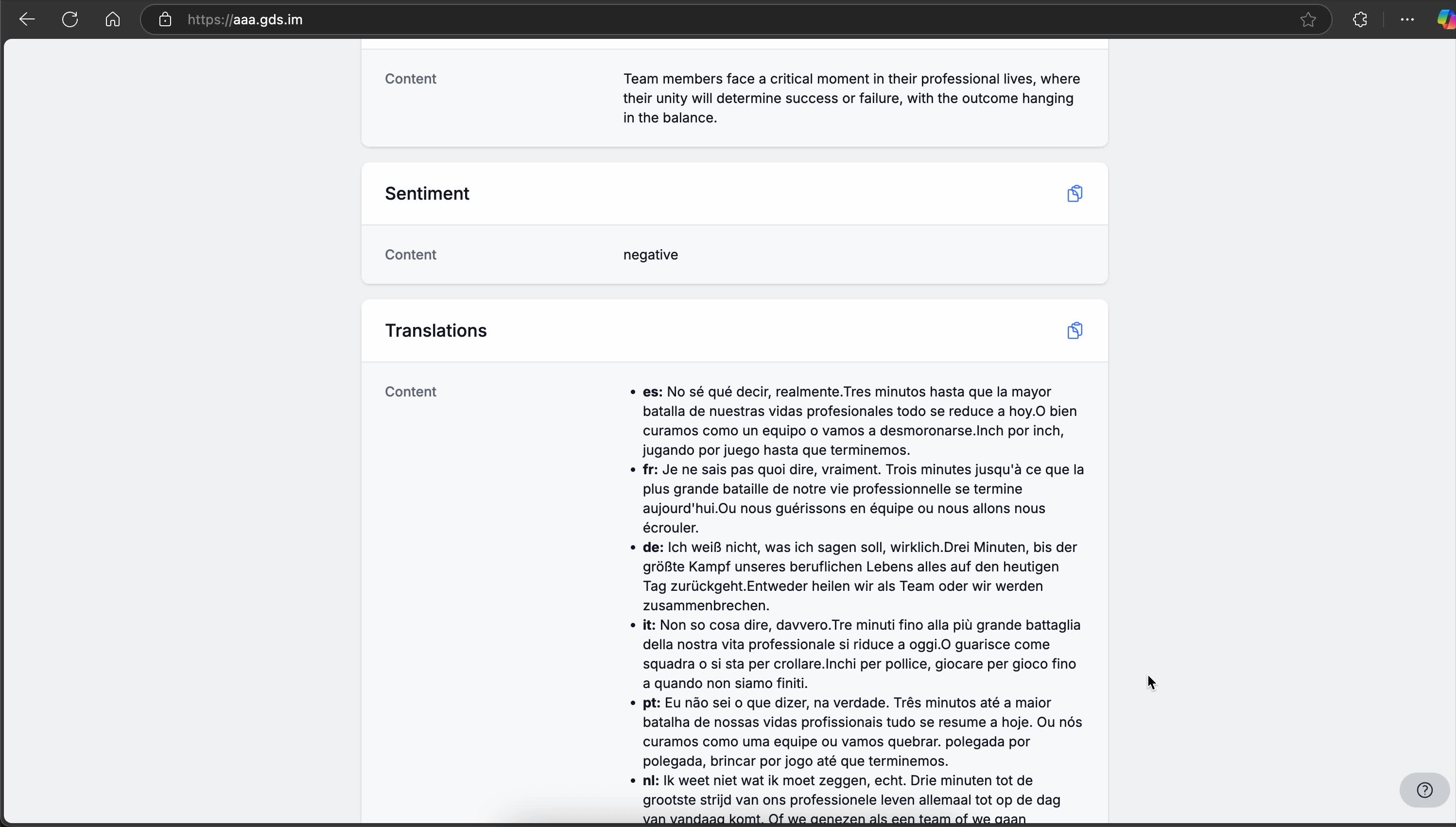

AI Audio Analyzer Created a user-friendly web application that leverages fine-tuned language models to analyze audio files. Users can record or upload their audio files for transcriptions, sentiment analysis, summaries, translations, breakdowns and questioning. This website provides a seamless and efficient way to unlock the insights with the audio data.

Visit here ↗ (opens in a new tab)

Visit here ↗ (opens in a new tab)

An HTTP POST handler that processes audio files, verifies tokens, checks file sizes and types, processes the audio for transcription, sentiment analysis, translation, and other tasks, and returns the results as a JSON response.

Here are the model details, generation configurations, and their explanations for each AI model used in the project:

Transcription Model (Whisper)

Model Name: openai/whisper-large-v3-turbo

Details: This model transcribes audio data into text.

Summarization Model (LLaMA)

Model Name: @cf/meta/llama-3.2-3b-instruct

Generation Configurations:

temperature: 0.1

max_tokens: 150

top_p: 0.3

frequency_penalty: 0

presence_penalty: 0

temperature: Low temperature (0.1) ensures deterministic and focused output, reducing randomness.

max_tokens: Limits the summary to a maximum of 150 tokens for brevity.

top_p: Narrow sampling (0.3) ensures the model selects from the most probable tokens, resulting in more controlled output.

frequency_penalty: No penalty (0) prevents the model from reducing the likelihood of repeated tokens, allowing for natural repetition if necessary.

presence_penalty: No penalty (0) prevents the model from reducing the likelihood of mentioning new information, maintaining focus on the input text.Sentiment Analysis Model (Twitter RoBERTa)

Model Name: cardiffnlp/twitter-roberta-base-sentiment-latestTranslation Model (M2M100)

Model Name: @cf/meta/m2m100-1.2b

Generation Configurations:

temperature: 0.1

top_p: 0.3

max_length: 1024

truncation: true

temperature: Low temperature (0.1) ensures deterministic and focused output, reducing randomness.

top_p: Narrow sampling (0.3) ensures the model selects from the most probable tokens, resulting in more controlled output.

max_length: Limits the translation to a maximum of 1024 tokens to ensure completeness without excessive length.

truncation: Ensures the text is truncated to fit within the specified maximum length, preventing excessively long outputs.Breakdown Model (Gemini)

Model Name: gemini-1.5-flash-latest

Generation Configurations:

temperature: 0.1

topP: 0.3

topK: 40

Question Generation Model (LLaMA)

Model Name: llama-3.2-3b-instruct

Generation Configurations:

temperature: 0.1 (initial), 0 (final attempt)

max_tokens: 1024

top_p: 0.5 (initial), 0.3 (final attempt)

frequency_penalty: 0

presence_penalty: 0